Another Apple Addition

Apple’s ever-changing iPad has received one of the biggest additions the company has ever made to the product. Apple announced the new iPad Pro in March of 2020. Starting at $999, the 12.9-inch model (also available in a smaller 11-inch size) is pitched with the line “Your next computer is not a computer.” The headline addition is a new LIDAR scanner in the rear camera system, which Apple argued was the missing piece for revolutionary augmented reality applications.

LIDAR Background

Standing for “Light Detection and Ranging”, LIDAR is not a brand new technology. This type of laser sensor has long been used in driverless cars, where it lets the vehicle detect objects and build 3D maps of its surroundings in near real time. It functions as a way to “see” other cars, trees, the road itself, and so on. While Apple’s smaller scanner does not quite reach that level, the company claims that it can measure the distance to objects up to 5 meters (about 16 feet) away. In addition, by combining depth information from the LIDAR scanner with camera data, motion sensors, and computer vision algorithms, the latest iPad Pro will be faster and better at placing augmented reality (AR) objects and tracking the location of people.
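The “ranging” part of the name comes down to simple arithmetic: a pulse of light goes out, bounces off an object, and the sensor times how long it takes to come back. The sketch below illustrates that round-trip calculation in Python. It is only an illustration of the principle, not Apple’s implementation, and the function names are made up for this example.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance to a target, in metres, from a pulse's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so the one-way
    # distance is half of the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return the round-trip time, in seconds, for a target at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # An object 5 metres away returns the pulse after roughly 33 nanoseconds.
    t = round_trip_for_range(5.0)
    print(f"round trip for 5 m: {t * 1e9:.1f} ns")
    print(f"recovered range: {range_from_round_trip(t):.2f} m")
```

The tiny numbers involved hint at why the timing hardware has to be so precise: at room scale, the whole measurement happens within a few tens of nanoseconds.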

Implementing AR

This new sensor is Apple’s latest attempt to make AR a key part of its apps and software. The company has been working on this since 2017, when it introduced ARKit, a platform for developing AR apps for iPhone and iPad. At many iOS updates and iPhone launches, Apple has demonstrated AR experiences such as Minecraft or a cooperative Lego game.

Although Apple hypes up AR at its demos, there are still few compelling reasons to actually use AR apps on mobile devices beyond those extravagant showcases. AR apps on iOS are unfortunately not yet an essential part of the Apple experience. Apple has pushed AR for over three years, but no app has made a decent case for why customers or developers should be entranced by the technology.

The Missing Piece of the Puzzle

The LIDAR sensor may offer the missing piece of the puzzle. Apple showed off the sensor in a demo of the Apple Arcade game Hot Lava, which used the depth data to model a living room more quickly and accurately and generate a gameplay surface. There is also a CAD app that can scan a room and build a 3D model of it, and another demo that promises accurate measurements of the range and motion of a person’s arm. Apple may be laying the groundwork for more LIDAR-equipped AR devices (like a new iPhone or iPad), which could allow for faster, better, and more immersive AR apps in the future.

LIDAR Takes to the Skies

Based in Rome, NY, Microdrones grew out of a collaboration between the German inventor of the world’s first commercial quadcopter and a determined surveying payload software developer in North America. The company delivers complete and reliable mapping systems developed specifically for the surveying, mining, construction, oil and gas, and precision agriculture industries. Its software transforms the raw data collected in the field by Microdrones survey equipment into high-quality, survey-grade data. All of this data is collected through LIDAR technology.

Predicting Disaster

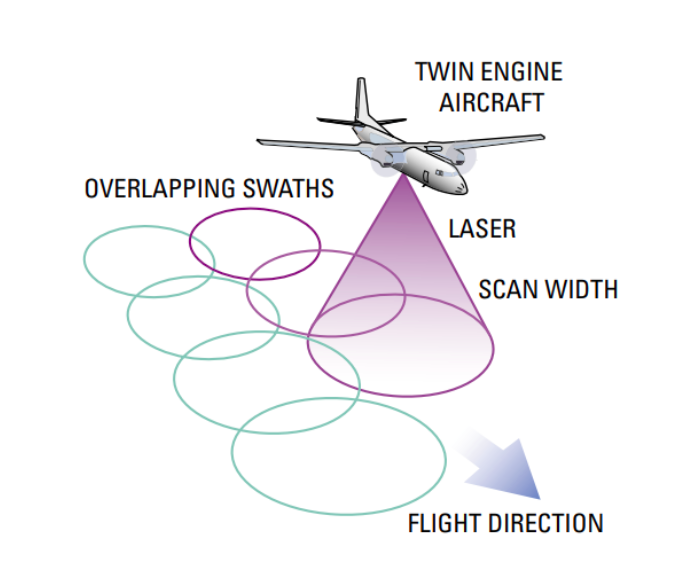

LIDAR technology can map out a flood before it even happens. It collects high-accuracy data over large areas at a lower cost than traditional methods. Acting as a laser rangefinder mounted in an airplane, a LIDAR system fires laser pulses tens of thousands of times a second, and each measured distance is converted into an elevation point. This allows computer programs (such as flow models) to simulate floods over the entire floodplain, rather than across a select few cross-sections. Previously, the elevation data was gathered manually, which was very expensive and only produced cross-sectional data. With elevation data from LIDAR, flow can be simulated everywhere, giving a more detailed and accurate idea of where the water will go during a flood.
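To make the idea concrete, here is a rough Python sketch of the two steps described above: turning a measured distance into an elevation point, and then using a grid of elevations to see where water could spread. Everything in it is an assumption made for illustration. The function names are hypothetical, the flat-geometry conversion ignores the aircraft’s tilt and position corrections, and the “flood” is a simple fill of connected low-lying cells, which is a far cruder stand-in than the flow models real floodplain studies use.

```python
import math
from collections import deque


def ground_elevation(aircraft_altitude_m, range_m, scan_angle_deg=0.0):
    """Estimate the elevation of the spot a pulse hit.

    Assumes a level aircraft: the vertical drop is the measured range
    scaled by the cosine of the scan angle away from straight down.
    """
    vertical_drop = range_m * math.cos(math.radians(scan_angle_deg))
    return aircraft_altitude_m - vertical_drop


def flooded_cells(elevations, source, water_level_m):
    """Return the set of (row, col) cells that water can reach.

    Starting from the source cell (for example, a river), water spreads
    to any 4-connected neighbour whose elevation is below the water level.
    Low spots cut off by higher ground stay dry.
    """
    rows, cols = len(elevations), len(elevations[0])
    flooded = set()
    queue = deque([source])
    while queue:
        r, c = queue.popleft()
        if not (0 <= r < rows and 0 <= c < cols):
            continue  # outside the surveyed area
        if (r, c) in flooded:
            continue  # already visited
        if elevations[r][c] >= water_level_m:
            continue  # ground is above the water
        flooded.add((r, c))
        queue.extend([(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)])
    return flooded


if __name__ == "__main__":
    # A made-up 3x4 elevation grid in metres; the river sits at row 1, column 1.
    grid = [
        [5.0, 5.0, 5.0, 5.0],
        [5.0, 1.0, 2.0, 5.0],
        [5.0, 5.0, 5.0, 1.5],
    ]
    print(sorted(flooded_cells(grid, (1, 1), 3.0)))
```

Even in this toy version, the advantage of having elevation everywhere shows up: the low cell in the bottom-right corner stays dry because higher ground separates it from the river, a detail that a handful of cross-sections could easily miss.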